Lecture Materials

4. Linear algebra#

Linear systems of equations#

⚠️ Warning: The following Python examples are intended for educating concepts rather than for practical use. For practical implementations, consider using standard libraries such as

numpy,scipyorlinalg.

Systems of linear equations appear in multitude of applications and solving them efficiently is important. Linear systems are ubiquitous in computational physics, such as solving circuit equations, performing data fitting, applying numerical solvers, and modeling physical systems.

Generally a system of \(N\) linear equations corresponds to the following construction

which can be written in a matrix form

where

A unique solution to the system of equations exists if they are all linearly independent. Equivalently, this is the case if the determinant of matrix \(\mathbf{A}\) is non-zero, \(\det \mathbf{A} \neq 0\).

There are many efficient libraries that exist to solve systems of linear equations efficiently, and these should be used. Nevertheless, going over the basic methods for solving linear equations is important to understand the concepts, limitations, and possible issues and solutions to them.

Gaussian elimination#

Gaussian elimination is the most basic approach of solving systems of linear equations.

It is based on the fact that the following two operations leave the system of equations equivalent.

One can multiply any equation by a constant (non-zero) factor, and it will still be the same system of equations with the same solution.

One can take any linear combination of two (or more) equations to get another correct equation. This new equation can replace any of the equations entering this linear combinations. In other words, we can subtract from any of the equations any other equation, and the resulting system of linear equation will stay equivalent to what we had before.

Gaussian elimination simplifies a system of linear equations by systematically eliminating variables from equations, reducing the system to an upper triangular form. Let us take a system of 4 equations as an example

We start from the first row. We divide the row by \(a_{11}\) such that its diagonal element is equal to unity

Then, we make all entries in the first column below the main diagonal to go to zero. To achieve that we subtract the first equation multiplied by \(a_{j2}\) from the \(j\)th equation:

which we can denote as

Repeat steps 1-2 to make all elements below the main diagonal in the 2nd column go to zero

Repeat until all elements below the main diagonal are zero and all diagonal elements are equal to unity

Example: System of equations

becomes

Backsubstitution#

In the end we have the following system of equations

The solution now is trivial and proceeds through backsubstition, starting from

to

to

and finally to

The algorithm complexity is \(O(n^3)\). One limitation of simple Gaussian elimination is its susceptibility to numerical instability, especially for ill-conditioned matrices.

import numpy as np

def linsolve_gaussian(A0, v0):

# Initialization

A = A0.copy()

v = v0.copy()

# A = A0

# v = v0

N = len(v)

# Gaussian elimination

for r in range(N):

# Divide the current row by the pivot (diagonal element)

# Ensure diagonal element is non-zero to proceed

div = A[r,r]

if (div == 0.):

print("Diagonal element is zero! Cannot solve the system with simple Gaussian elimination")

return None

A[r,:] /= div

v[r] /= div

# Now subtract this row from the lower rows

for r2 in range(r+1,N):

mult = A[r2,r]

A[r2,:] -= mult * A[r,:]

v[r2] -= mult * v[r]

# Backsubstitution

x = np.empty(N,float)

for r in range(N-1,-1,-1):

x[r] = v[r]

for c in range(r+1,N):

x[r] -= A[r][c] * x[c]

return x

Here, we solve a system of 4 linear equations using the Gaussian elimination algorithm. The solution is verified by checking if \(\mathbf{A} \mathbf{x} = \mathbf{v}\).

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_gaussian(A,v)

print(A)

print('x =',x)

print('Ax =', A.dot(x))

print('v =', v)

[[ 2. 1. 4. 1.]

[ 3. 4. -1. -1.]

[ 1. -4. 1. 5.]

[ 2. -2. 1. 3.]]

x = [ 2. -1. -2. 1.]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

Pivoting#

Simple Gaussian elimination relies on the diagonal element of the present row being non-zero. This is not always the case: the diagonal element could be zero even in non-singular systems that have a perfectly valid solution. Simple Gaussian elimination will fail.

Consider our example where we set the very first element to zero

The system has a solution but the solver will fail

A = np.array([[ 0, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_gaussian(A,v)

print('x =',x)

print('Ax =', A.dot(x))

print('v =', v)

Diagonal element is zero! Cannot solve the system with simple Gaussian elimination

x = None

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

Cell In[3], line 8

6 x = linsolve_gaussian(A,v)

7 print('x =',x)

----> 8 print('Ax =', A.dot(x))

9 print('v =', v)

TypeError: unsupported operand type(s) for *: 'float' and 'NoneType'

The solution here is to apply the so-called partial pivoting. Pivoting involves swapping rows in the matrix to ensure that the largest possible pivot element is used at each step of Gaussian elimination. This reduces round-off errors and improves stability.

Recall that the system of equations does not change if one swaps any two equations (rows). The solution thus is to swap the current row, if its diagonal element is zero, with one of the lower rows where the corresponding element is non-zero. (If all elements in all rows below are zero, we are dealing with a singular matrix that has no solutions).

In practice, at each step one chooses the row where the pivot element is largest in magnitude, even the pivot of the present row is non-zero. In this way one minimizes round-off error associated with the subtraction of large numbers. In our example from above,

we will swap the first and second rows to obtain

This procedure is performed at each step. To implement partial pivoting we will keep track of all the row swaps by keeping a map between the original row numbers and the current ones.

def linsolve_gaussian_partialpivot(A0, v0):

# Initialization

A = A0.copy()

v = v0.copy()

N = len(v)

# Gaussian elimination

for r in range(N):

# Find the pivot element (largest in magnitude)

r_pivot = r

for i in range(r + 1, N):

if (abs(A[i][r]) > abs(A[r_pivot][r])):

r_pivot = i

# Swap rows to move the largest pivot element to the diagonal

A[[r,r_pivot]] = A[[r_pivot,r]]

v[[r,r_pivot]] = v[[r_pivot,r]]

# Divide row r by the pivot element

div = A[r,r]

if (div == 0.):

print("Diagonal element is zero! The system appears to be singular")

return None

A[r,:] /= div

v[r] /= div

# Now subtract this row from the lower rows

for r2 in range(r+1,N):

mult = A[r2,r]

A[r2,:] -= mult * A[r,:]

v[r2] -= mult * v[r]

# Backsubstitution

x = np.empty(N,float)

for r in range(N-1,-1,-1):

x[r] = v[r]

for c in range(r+1,N):

x[r] -= A[r][c] * x[c]

return x

In this example, we solve a system of linear equations using Gaussian elimination with partial pivoting. The results show that the solution is accurate, even when a diagonal element starts at zero.

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_gaussian_partialpivot(A,v)

print('x =',x)

print('Ax =', A.dot(x))

print('v =', v)

x = [ 2. -1. -2. 1.]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

A = np.array([[ 0, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_gaussian_partialpivot(A,v)

print('x =',x)

print('Ax =', A.dot(x))

print('v =', v)

x = [ 1.61904762 -0.42857143 -1.23809524 1.38095238]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

LU decomposition#

Gaussian elimination#

Now that we have explored Gaussian elimination and pivoting, we move to LU decomposition, which builds upon these concepts for obtaining efficient solutions.

LU decomposition factors \(\mathbf{A}\) into a lower triangular matrix \(\mathbf{L}\) and an upper triangular matrix \(\mathbf{U}\), simplifying the solution of systems of equations. This is particularly useful when the coefficient matrix \(\mathbf{A}\) remains constant while the right-hand side \(\mathbf{v}\) changes.

LU decomposition is the following representation of matrix \(\mathbf{A}\):

where \(\mathbf{L}\) and \(\mathbf{U}\) are lower and upper triangular matrices, respectively.

At the end of Gaussian elimination our matrix became upper triangular

Discarding pivoting for a moment, all steps of the Gaussian elimination can be represented by matrix multiplication, i.e.

where e.g.

and so on.

These are lower triangular matrices. Their inverses are also lower triangular matrices

and so on.

Matrix \(\mathbf{A}\) can therefore be represented as

where

This is the \(LU\)-decomposition of our matrix into a product of lower and upper triangular matrices. It is trivial to modify the Gaussian elimination code to calculate \(L\) and \(U\) matrices.

def lu_decomp(A):

# Initialization

U = A.copy()

N = len(A[0])

L = np.zeros((N,N), float)

# Gaussian elimination

for r in range(N):

# Record elements of the lower triangular matrix L

for r2 in range(r,N):

L[r2][r] = U[r2][r]

# Divide row r by diagonal element

div = U[r,r]

if (div == 0.):

print("Diagonal element is zero! LU decomposition without pivoting is not possible!")

return None

U[r,:] /= div

# Now subtract this row from the lower rows

for r2 in range(r+1,N):

mult = U[r2,r]

U[r2,:] -= mult * U[r,:]

return L, U

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

L, U = lu_decomp(A)

print('L =', L)

print('U =', U)

print('LU =', np.dot(L,U))

print('A =', A)

L = [[ 2. 0. 0. 0. ]

[ 3. 2.5 0. 0. ]

[ 1. -4.5 -13.6 0. ]

[ 2. -3. -11.4 -1. ]]

U = [[ 1. 0.5 2. 0.5]

[ 0. 1. -2.8 -1. ]

[-0. -0. 1. -0. ]

[-0. -0. -0. 1. ]]

LU = [[ 2. 1. 4. 1.]

[ 3. 4. -1. -1.]

[ 1. -4. 1. 5.]

[ 2. -2. 1. 3.]]

A = [[ 2. 1. 4. 1.]

[ 3. 4. -1. -1.]

[ 1. -4. 1. 5.]

[ 2. -2. 1. 3.]]

Repeated solutions of linear equations#

LU decomposition is useful for repeated solution of systems of linear equations

when the matrix \(\mathbf{A}\) stays the same but where the vector \(\mathbf{v}\) can change.

Indeed, the system of equations becomes

Let us define

then

We can solve the system for \(\mathbf{x}\) in two steps.

First we solve the equation \(\mathbf{L y} = \mathbf{v}\) using forward substitution, in analogy to backsubstitution we used before.

Once we have \(\mathbf{y}\), we can solve \(\mathbf{Ux} = \mathbf{y}\) for \(\mathbf{x}\) using backsubstitution.

def solve_using_lu(L,U,v):

# L*U*x = v

# First solve L*y = v with forward substitution

# Then solve U*x = y with backsubstitution

# Initialization

N = len(v)

# Forward substitution for L*y = v

y = np.empty(N,float)

for r in range(N):

y[r] = v[r]

for c in range(r):

y[r] -= L[r][r - 1 - c] * y[r - 1 - c]

y[r] /= L[r][r]

# Backsubstitution for U*x = y

x = np.empty(N,float)

for r in range(N-1,-1,-1):

x[r] = y[r]

for c in range(r+1,N):

x[r] -= U[r][c] * x[c]

return x

Let us apply the \(LU\)-decomposition to solve a system of linear equations

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

L, U = lu_decomp(A)

v = np.array([ -4, 3, 9, 7 ],float)

x = solve_using_lu(L,U,v)

print('x =', x)

print('Ax =', A.dot(x))

print('v =', v)

x = [ 2. -1. -2. 1.]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

The computational complexity of LU decomposition is \(O(n^3)\), the same as Gaussian elimination. However, for repeated solutions with different \(\mathbf{v}\), the decomposition step \(O(n^3)\) is done only once, while the forward and back substitution steps which are done for each \(\mathbf{v}\) have a lower complexity, \(O(n^2)\).

\(LU\) decomposition with pivoting#

Not every non-singular matrix allows for \(LU\)-decomposition because diagonal elements can be zero. In general case we need to allow the possibility to perform partial pivoting by exchanging the rows of our matrix. If we do that, what we get \(LU\)-decomposition with pivoting which can be written as

The permutation matrix \(\mathbf{P}\) records the row swaps performed during partial pivoting, ensuring that the LU decomposition is valid for singular and near-singular matrices.

Solving the system of equations

is also straightforward using forward and backsubstitution passes, except that we have to exchange the rows in the vector \(\mathbf{v}\) to account for the row swaps that we did.

In cases where diagonal elements are zero or very small, partial pivoting improves numerical stability by selecting the largest pivot element, reducing round-off errors.

def lu_decomp_partialpivot(A):

# Initialization

U = A.copy()

N = len(A[0])

L = np.zeros((N,N), float)

# Keep track of all row swaps

row_map = [i for i in range(N)]

# Gaussian elimination

for r in range(N):

# Find the pivot element (largest in magnitude)

r_pivot = r

for i in range(r + 1, N):

if (abs(U[i][r]) > abs(U[r_pivot][r])):

r_pivot = i

row_map[r], row_map[r_pivot] = row_map[r_pivot], row_map[r]

U[[r,r_pivot]] = U[[r_pivot,r]]

L[[r,r_pivot]] = L[[r_pivot,r]]

# Record the elements of L

for r2 in range(r,N):

L[r2][r] = U[r2][r]

# Divide row r by the pivot element

div = U[r,r]

if (div == 0.):

print("Diagonal element is zero! The system appears to be singular")

return None

U[r,:] /= div

# Now subtract this row from the lower rows

for r2 in range(r+1,N):

mult = U[r2,r]

U[r2,:] -= mult * U[r,:]

return L, U, row_map

Let us apply \(LU\)-decomposition with partial pivoting to solve a system of linear equations

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

L, U, row_map = lu_decomp_partialpivot(A)

print('L =', L)

print('U =', U)

print('LU =', np.dot(L,U))

print('A =', A)

L = [[ 3. 0. 0. 0. ]

[ 1. -5.33333333 0. 0. ]

[ 2. -1.66666667 4.25 0. ]

[ 2. -4.66666667 0.5 -1. ]]

U = [[ 1. 1.33333333 -0.33333333 -0.33333333]

[-0. 1. -0.25 -1. ]

[ 0. 0. 1. 0. ]

[-0. -0. -0. 1. ]]

LU = [[ 3. 4. -1. -1.]

[ 1. -4. 1. 5.]

[ 2. 1. 4. 1.]

[ 2. -2. 1. 3.]]

A = [[ 2. 1. 4. 1.]

[ 3. 4. -1. -1.]

[ 1. -4. 1. 5.]

[ 2. -2. 1. 3.]]

The matrices A and L*U coincide up to a permutation of rows, as they should.

def solve_using_lu_partialpivot(L,U,row_map,v):

# L*U*x = v

# First solve L*y = v with forward substitution

# Then solve U*x = y with backsubstitution

# Initialization

N = len(v)

# Backsubstitution for L*y = v

y = np.empty(N,float)

for rr in range(N):

r = row_map[rr]

y[rr] = v[r]

for c in range(rr):

y[rr] -= L[rr][rr - 1 - c] * y[rr - 1 - c]

y[rr] /= L[rr][rr]

# Backsubstitution for U*x = y

x = np.empty(N,float)

for rr in range(N-1,-1,-1):

x[rr] = y[rr]

for c in range(rr+1,N):

x[rr] -= U[rr][c] * x[c]

return x

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

L, U, row_map = lu_decomp_partialpivot(A)

v = np.array([ -4, 3, 9, 7 ],float)

x = solve_using_lu_partialpivot(L,U,row_map,v)

print('x =', x)

print('Ax =', A.dot(x))

print('v =', v)

x = [ 2. -1. -2. 1.]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

Now let us consider an example where the first diagonal element is zero

A = np.array([[ 0, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

L, U, row_map = lu_decomp_partialpivot(A)

v = np.array([ -4, 3, 9, 7 ],float)

x = solve_using_lu_partialpivot(L,U,row_map,v)

print('x =', x)

print('Ax =', A.dot(x))

print('v =', v)

x = [ 1.61904762 -0.42857143 -1.23809524 1.38095238]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

Matrix inverse#

The inverse \(\mathbf{A}^{-1}\) of matrix \(\mathbf{A}\) satisfies

We can therefore evaluate \(\mathbf{A}^{-1}\) by solving \(N\) systems of linear equations

where

and \(\mathbf{x}_k\) is the \(k\)th column of the inverse matrix \(\mathbf{A}^{-1}\). Note that each equation has the same matrix \(\mathbf{A}\), therefore, we can reuse the \(LU\)-decomposition \(\mathbf{A} = \mathbf{L U}\).

The computational complexity of inverting a matrix using LU decomposition is \(O(n^3)\), the same as direct methods. However, LU decomposition is more efficient for reuse when solving multiple systems of equations.

def matrix_inverse_with_ludecomp(A):

# First step: LU decomposition of matrix A

L, U, row_map = lu_decomp_partialpivot(A)

N = len(row_map)

Ainv = A.copy()

for c in range(N):

v = np.zeros(N, float)

v[c] = 1.

x = solve_using_lu_partialpivot(L,U,row_map,v)

Ainv[:,c] = x

return Ainv

In this section, we compute the matrix inverse using LU decomposition and verify the result by multiplying the matrix with its inverse. The result should be approximately the identity matrix.

Test it with our matrix

# if unavailable run

# pip3 install tabulate

from tabulate import tabulate

A = np.array([[ 0, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

Ainv = matrix_inverse_with_ludecomp(A)

print("A*A^{-1} = \n", tabulate(A.dot(Ainv)))

A*A^{-1} =

------------ ------------ ------------ ------------

1 -5.55112e-17 1.11022e-16 -1.11022e-16

-2.77556e-17 1 -1.11022e-16 3.33067e-16

2.77556e-17 5.55112e-17 1 4.44089e-16

-2.77556e-17 -5.55112e-17 -5.55112e-16 1

------------ ------------ ------------ ------------

Here, we test the matrix inversion with a random matrix of a larger size to evaluate the generality and scalability of the method.

# A is a square random matrix of size n

n = 8

A = np.random.rand(n, n)

Ainv = matrix_inverse_with_ludecomp(A)

print("A*A^{-1} =\n", tabulate(A.dot(Ainv)))

A*A^{-1} =

------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------

1 8.98446e-16 -3.63825e-16 9.68115e-16 3.8367e-16 2.76082e-17 -7.86275e-17 -1.46751e-17

-1.60556e-15 1 1.19598e-16 1.82986e-15 2.04425e-15 -5.52896e-16 3.44812e-16 -2.71546e-16

-6.24002e-15 6.43916e-15 1 -3.54889e-16 3.45216e-15 1.04332e-15 7.18617e-16 -1.48669e-16

-3.54857e-15 -1.22857e-17 7.52131e-16 1 6.61569e-15 -4.66744e-17 2.41854e-16 -3.33483e-16

-1.21877e-15 3.08609e-16 -3.19392e-16 8.83581e-16 1 -1.4637e-16 2.12937e-17 6.28979e-16

-2.82632e-15 6.55297e-15 4.14196e-16 3.40542e-15 -1.68958e-15 1 4.43654e-16 -9.26614e-16

-3.07309e-15 3.65747e-15 -8.80142e-16 -1.05223e-15 -3.50322e-16 1.67612e-16 1 2.25891e-16

8.91805e-16 -5.89908e-16 6.15895e-16 2.77971e-15 -1.6479e-15 9.29913e-16 2.06331e-16 1

------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------

Special systems#

Tridiagonal systems#

A particular case of linear systems of equations is tridiagonal systems. In this case the matrix \(\mathbf{A}\) has non-zero elements only at the main diagonal, as well lower and upper subdiagonal:

Tridiagonal systems of linear equations often appear in physics, e.g.

Nearest-neighbor interaction (linear chain of springs)

Finite differences applied to partial differential equations (heat equation)

A tridiagonal system of linear equations can efficiently be solved using Gaussian elimination in linear time. This is because one only needs to process one row at a time (the rest already have zeros in the column), and at most two elements during the elimination process. Similarly, during the backsubstitution step, one only has to subtract a single element from the upper superdiagonal, i.e.

import numpy as np

# Solve tridiagonal system of linear equations

# d: vector of diagonal elements

# l: vector of elements on the lower subdiagonal

# u: vector of elements on the upper superdiagonal

# v0: right-hand-side vector

def linsolve_tridiagonal(d, l, u, v0):

# Initialization

N = len(v0)

a = d.copy() # Current diagonal elements

b = u.copy() # Current upper diagonal elements

v = v0.copy()

# Gaussian elimination

for r in range(N):

if (a[r] == 0.):

print("Diagonal element is zero! Cannot solve the tridiagonal system with simple Gaussian elimination")

return None

b[r] /= a[r]

v[r] /= a[r]

a[r] = 1.

if (r < N - 1):

a[r + 1] -= l[r+1] * b[r]

v[r + 1] -= l[r+1] * v[r]

# Backsubstitution

x = np.empty(N,float)

x[N - 1] = v[N - 1]

for r in range(N-2,-1,-1):

x[r] = v[r] - b[r] * x[r + 1]

return x

# Returns tridiagonal vectors d,l,u of matrix A

# See also scipy.sparse.diags at https://docs.scipy.org/doc/scipy/reference/generated/scipy.sparse.diags.html

def get_tridiagonal(A):

n = len(A[0])

d = np.zeros(n, float)

l = np.zeros(n, float)

u = np.zeros(n, float)

for r in range(n):

d[r] = A[r][r]

if (r > 0):

l[r] = A[r][r-1]

if (r < n - 1):

u[r] = A[r][r+1]

return d, l, u

A = np.array([[ 2, 1, 0, 0 ],

[ 3, 4, -5, 0 ],

[ 0, -4, 3, 5 ],

[ 0, 0, 1, 3 ]],float)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_tridiagonal(*(get_tridiagonal(A)), v)

print('x =', x)

print('Ax =', A.dot(x))

print('v =', v)

x = [-2.04 0.08 -1.76 2.92]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

Test on a random tridiagonal matrix

def random_tridiagonal(n):

A = np.random.rand(n, n)

for r in range(n):

for c in range(0,r-1):

A[r][c] = 0.

for c in range(r + 2, n):

A[r][c] = 0.

return A

%%time

n = 5000

A = random_tridiagonal(n)

# print("A =\n", tabulate(A))

v = np.random.rand(n)

x = linsolve_tridiagonal(*(get_tridiagonal(A)),v)

print(" x = ", x)

print("Ax = ", A.dot(x))

print(" v = ", v)

x = [-84.06637737 2.20015414 81.64291425 ... -0.29330997 0.11687412

0.79448278]

Ax = [0.01741138 0.34323675 0.90697728 ... 0.52674893 0.3843136 0.70488768]

v = [0.01741138 0.34323675 0.90697728 ... 0.52674893 0.3843136 0.70488768]

CPU times: user 2.54 s, sys: 50.2 ms, total: 2.59 s

Wall time: 2.57 s

The direct “brute force” solution will take much longer, about x20-30 times longer for this example.

%%time

L, U, row_map = lu_decomp_partialpivot(A)

x = solve_using_lu_partialpivot(L,U,row_map,v)

print(" x = ", x)

print("Ax = ", A.dot(x))

print(" v = ", v)

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

File <timed exec>:1

Cell In[11], line 37, in lu_decomp_partialpivot(A)

35 for r2 in range(r+1,N):

36 mult = U[r2,r]

---> 37 U[r2,:] -= mult * U[r,:]

39 return L, U, row_map

KeyboardInterrupt:

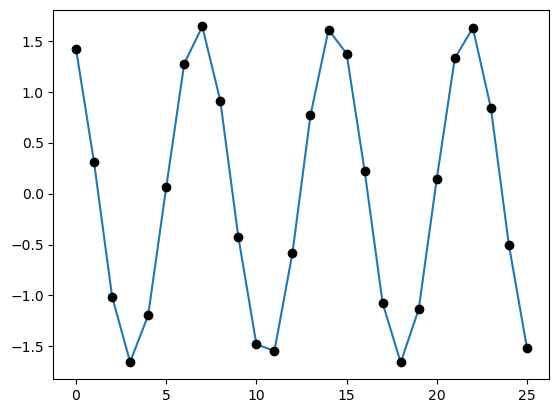

Let us apply it to the springs example from Section 6.1 of M. Newman Computational Physics

We have linear chain of strings. The amplitudes \(x_i\) of the vibration obey

from pylab import plot,show

# Constants

N = 26

C = 1.0

m = 1.0

k = 6.0

omega = 2.0

alpha = 2*k-m*omega*omega

# Set up the initial values of the arrays

A = np.empty([N,N],float)

for i in range(N):

if i>0:

A[i,i-1] = -k

A[i,i] = alpha

if i<N-1:

A[i,i+1] = -k

A[0,0] = alpha - k

A[N-1,N-1] = alpha - k

v = np.zeros(N,float)

v[0] = C

# Solve the equations

x = linsolve_tridiagonal(*(get_tridiagonal(A)),v)

# Make a plot using both dots and lines

plot(x)

plot(x,"ko")

show()

Banded systems#

Band-diagonal system: in each row

at most \(m_{\rm lower}\) non-zero elements to the left of the main diagonal

at most \(m_{\rm upper}\) non-zero elements to the right of the main diagonal

E.g. for \(m_{\rm lower} = m_{\rm upper} = 2\):

Solving band-diagonal system of equations also proceeds through Gaussian elimination. At each step one has to normalize \(m_{\rm upper} + 1\) elements in the current row, then subtract the current row from at most \(m_{\rm lower}\) rows below it. The overall complexity is thus \(O(N \, m_{\rm lower} \, m_{\rm upper})\). The procedure is thus very efficient when \(m_{\rm lower}, m_{\rm upper} \ll N\).

Tridiagonal matrix is a special case with \(m_{\rm lower} = m_{\rm upper} = 1\).

import numpy as np

# Solving linear system of banded equations

# d: diagonal elements of the banded matrix

# l: lower non-zero diagonals of the banded matrix

# u: upper non-zero diagonals of the banded matrix

# v0: r.h.s. vector

def linsolve_banded(d, l, u, v0):

# Initialization

v = v0.copy()

N = len(v)

mlower = len(l)

mupper = len(u)

a = d.copy() # Diagonal elements

dup = u.copy() # Upper diagonal elements

dlow = l.copy() # Lower diagonal elements

# Gaussian elimination

for r in range(N):

# Divide row r by diagonal element

div = a[r]

if (div == 0.):

print("Diagonal element is zero! Cannot solve the system with simple Gaussian elimination")

return None

for c in range(r+1,min(r + mupper + 1,N)):

dup[c-r-1,r] /= div

a[r] /= div

v[r] /= div

# Now subtract this row from the lower rows

# We do not need to go through all the rows

# but only up to a certain row depending

# on the number of non-zero elements to right and left of the main diagonal

max_row = min(r + mlower, N - 1)

# First the vector

for r2 in range(r+1,max_row + 1):

v[r2] -= dlow[r2 - r - 1, r2] * v[r]

# Then the matrix rows

for c in range(r + 1,min(r + mupper + 1,N)):

for r2 in range(r+1,max_row + 1):

if (c == r2):

a[r2] -= dlow[r2 - r - 1, r2] * dup[c-r-1,r]

elif (c < r2 and r2 - c - 1 < mlower):

dlow[r2 - c - 1,r2] -= dlow[r2 - r - 1, r2] * dup[c-r-1,r]

elif (c > r2 and c - r2 - 1 < mupper):

dup[c - r2 - 1,r2] -= dlow[r2 - r - 1, r2] * dup[c-r-1,r]

# Backsubstitution

x = np.empty(N,float)

for r in range(N-1,-1,-1):

x[r] = v[r]

for c in range(r+1,min(r + mupper + 1,N)):

x[r] -= dup[c - r - 1,r] * x[c]

return x

# Returns band-diagonal elements d,l,u of matrix A

# See also scipy.sparse.diags at https://docs.scipy.org/doc/scipy/reference/generated/scipy.sparse.diags.html

def get_banded(A, mlower, mupper):

n = len(A[0])

d = np.zeros(n, float)

l = np.zeros((mlower,n), float)

u = np.zeros((mupper,n), float)

for r in range(n):

d[r] = A[r][r]

for c in range(max(0,r - mlower),r):

l[r-c-1,r] = A[r][c]

for c in range(r+1,min(r+mupper+1,n)):

u[c-r-1,r] = A[r][c]

return d, l, u

A = np.array([[ 2, 1, 0, 0 ],

[ 3, 4, -5, 0 ],

[ 0, -4, 3, 5 ],

[ 0, 0, 1, 3 ]],float)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_banded(*(get_banded(A,1,1)), v)

print('x =', x)

print('Ax =', A.dot(x))

print('v =', v)

x = [-2.04 0.08 -1.76 2.92]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

def random_banded(n, mlower, mupper):

A = np.random.rand(n, n)

for r in range(n):

for c in range(0,r-mlower):

A[r][c] = 0.

for c in range(r + mupper + 1, n):

A[r][c] = 0.

return A

n = 10

mupper = 4

mlower = 3

A = random_banded(n, mlower, mupper)

print("A =\n", tabulate(A))

v = np.random.rand(n)

x = linsolve_banded(*(get_banded(A,mlower,mupper)),v)

print(" x = ", x)

print("Ax = ", A.dot(x))

print(" v = ", v)

A =

-------- --------- --------- -------- --------- -------- --------- --------- --------- ---------

0.927711 0.0790651 0.0188905 0.555866 0.0312183 0 0 0 0 0

0.974666 0.652129 0.216072 0.959026 0.840551 0.206254 0 0 0 0

0.952373 0.733931 0.86302 0.699586 0.239527 0.429038 0.34825 0 0 0

0.456639 0.0983185 0.357806 0.982559 0.134197 0.072791 0.828986 0.0261721 0 0

0 0.0106343 0.235725 0.427714 0.708464 0.130835 0.989054 0.374284 0.0855525 0

0 0 0.486943 0.122587 0.998602 0.481227 0.274853 0.518552 0.678703 0.543946

0 0 0 0.740597 0.992492 0.500756 0.0570351 0.942434 0.887561 0.0542594

0 0 0 0 0.30678 0.267396 0.914409 0.467686 0.613992 0.992708

0 0 0 0 0 0.312956 0.733908 0.87937 0.255656 0.752071

0 0 0 0 0 0 0.168861 0.765929 0.450349 0.471139

-------- --------- --------- -------- --------- -------- --------- --------- --------- ---------

x = [ 0.81679297 -1.284879 0.56020577 0.02437503 0.2374934 -0.95293083

0.37910797 1.30413495 -0.67343242 -0.10645937]

Ax = [0.68770425 0.10569409 0.11546628 0.78196038 0.9778565 0.31985306

0.42377179 0.25546934 0.87459033 0.70945525]

v = [0.68770425 0.10569409 0.11546628 0.78196038 0.9778565 0.31985306

0.42377179 0.25546934 0.87459033 0.70945525]

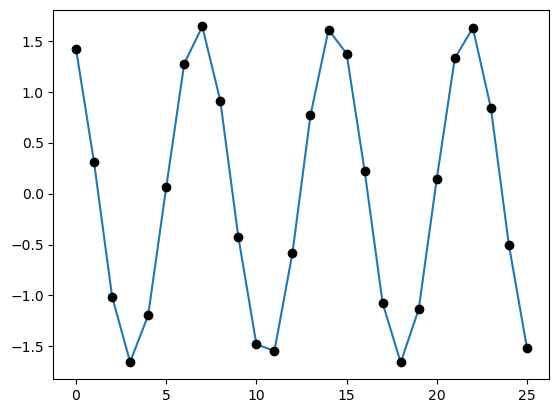

Let us apply it to the springs example from Section 6.1 of M. Newman Computational Physics

from pylab import plot,show

# Constants

N = 26

C = 1.0

m = 1.0

k = 6.0

omega = 2.0

alpha = 2*k-m*omega*omega

# Set up the initial values of the arrays

A = np.empty([N,N],float)

for i in range(N):

if i>0:

A[i,i-1] = -k

A[i,i] = alpha

if i<N-1:

A[i,i+1] = -k

A[0,0] = alpha - k

A[N-1,N-1] = alpha - k

v = np.zeros(N,float)

v[0] = C

# Solve the equations

x = linsolve_banded(*(get_banded(A,1,1)),v)

# Make a plot using both dots and lines

plot(x)

plot(x,"ko")

show()

Sparse systems#

Many problems in computational physics, such as finite element methods, computational fluid dynamics, and network analysis, lead to sparse linear systems where most of the coefficients in the matrix are zero. While some of these have a band structure and can be solved using band matrix methods, others have less regular structure and require more advanced techniques. There are many methods for solving sparse systems which go beyond the scope of this course but are available in libraries such as SciPy (Python), Eigen (C++), or Armadillo (C++).

QR decomposition#

QR decomposition#

Another useful decomposition of a square matrix \(\mathbf{A}\) is QR decomposition. Any real square matrix \(\mathbf{A}\) can be written in a form

where \(\mathbf{Q}\) is an orthogonal matrix, i.e. \(\mathbf{Q^{-1}} = \mathbf{Q^T}\) thus

and \(\mathbf{R}\) is upper triangular matrix.

There are many algorithms for constructing the QR decomposition, from simple Gram-Schimdt process to more involved methods using Householder transformation and Givens rotations. We will not go into detail into these methods as they are readily implemented in linear algebra packages.

A = np.array([[ 2, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

print('A =\n', tabulate(A))

Q, R = np.linalg.qr(A)

print('Q =\n', tabulate(Q))

print('R =\n', tabulate(R))

print('Q^T Q =\n', np.dot(Q.transpose(),Q))

A =

- -- -- --

2 1 4 1

3 4 -1 -1

1 -4 1 5

2 -2 1 3

- -- -- --

Q =

--------- ---------- --------- ----------

-0.471405 -0.0563436 0.875765 -0.0874017

-0.707107 -0.507093 -0.436141 -0.229429

-0.235702 0.732467 -0.141898 -0.622737

-0.471405 0.450749 -0.150604 0.742914

--------- ---------- --------- ----------

R =

-------- -------- -------- ---------

-4.24264 -1.41421 -1.88562 -2.35702

0 -5.91608 1.46493 5.46533

0 0 3.6467 0.150604

0 0 0 -0.742914

-------- -------- -------- ---------

Q^T Q =

[[ 1.00000000e+00 -4.51958521e-17 -1.57054640e-16 3.42526633e-17]

[-4.51958521e-17 1.00000000e+00 3.18817176e-17 8.09596522e-17]

[-1.57054640e-16 3.18817176e-17 1.00000000e+00 1.68578756e-16]

[ 3.42526633e-17 8.09596522e-17 1.68578756e-16 1.00000000e+00]]

Systems of linear equations with QR decomposition#

Suppose we have a system of linear equations

If \(\mathbf{A} = \mathbf{Q R}\) we can rewrite it as

Multiplying each sides of the equation by \(\mathbf{Q^T}\), we have

The matrix \(\mathbf{R}\) is upper triangular, thus, the system can solved using backsubstitution.

def linsolve_using_qr(Q,R,v):

# Initialization

N = len(v)

# Calculate y = Q^T v

y = np.zeros(N,float)

for r in range(N):

for c in range(N):

y[r] += Q[c][r] * v[c]

# Backsubstitution for R*x = y

x = np.empty(N,float)

for r in range(N-1,-1,-1):

x[r] = y[r]

for c in range(r+1,N):

x[r] -= R[r][c] * x[c]

x[r] /= R[r][r]

return x

A = np.array([[ 0, 1, 4, 1 ],

[ 3, 4, -1, -1 ],

[ 1, -4, 1, 5 ],

[ 2, -2, 1, 3 ]],float)

Q, R = np.linalg.qr(A)

v = np.array([ -4, 3, 9, 7 ],float)

x = linsolve_using_qr(Q,R,v)

print('x =', x)

print('Ax =', A.dot(x))

print('v =', v)

x = [ 1.61904762 -0.42857143 -1.23809524 1.38095238]

Ax = [-4. 3. 9. 7.]

v = [-4. 3. 9. 7.]

Eigenvalues and eigenvectors#

Eigenvalue problem#

A common matrix problem in physics is the calculation of eigenvalues and eigenvectors of a matrix (e.g. classical and quantum mechanics). The eigenvalue problem corresponds to the equation

In most cases the matrix \(\mathbf{A}\) is either real symmetric or Hermitian (in case of complex numbers). In this case, for a \(N\)x\(N\) matrix there are \(N\) eigenvectors \(\mathbf{v}_1 \ldots\mathbf{v}_N\) with real eigenvalues \(\lambda_1 \ldots \lambda_N\). The eigenvectors are orthogonal, i.e. \(\mathbf{v}_i \cdot \mathbf{v}_j = \delta_{i,j}\) with appropriate normalization. The eigenvalue problem can thus be cast as a matrix equation

Here \(\mathbf{V}\) is the matrix of eigenvectors, i.e. column \(k\) corresponds to the eigenvector \(\mathbf{v}_k\), and \(\mathbf{D}\) is a diagonal matrix with entries corresponding to eigenvalues, \(D_{ij} = \delta_{ij} \lambda_i\). Since eigenvectors are orthonormal, one has \(\mathbf{V^T V} = \mathbf{V V^T} = \mathbf{I}\).

QR algorithm#

QR algorithm is a method of finding eigenvalues and/or eigenvectors. It is based on the following iterative procedure.

One starts with matrix \(\mathbf{A}_1 = \mathbf{A}\) can calculates its QR decomposition \(\mathbf{A}_1 = \mathbf{Q}_1 \mathbf{R}_1\).

The next matrix is computed as \(\mathbf{A}_2 = \mathbf{R}_1 \mathbf{Q}_1\). This is a similarity transform. Multiplying both sides by \(\mathbf{I} = \mathbf{Q}_1^T \mathbf{Q}_1\) and taking into account \(\mathbf{A}_1 = \mathbf{Q}_1 \mathbf{R}_1\) one gets \(\mathbf{A}_2 = \mathbf{Q}_1^T \mathbf{A}_1 \mathbf{Q}_1\).

The process is repeated as follows, \(\mathbf{A}_{n+1} = \mathbf{R}_n \mathbf{Q}_n = \mathbf{Q}_n^T \mathbf{A}_n \mathbf{Q}_n\).

Matrix \(\mathbf{A}_n\) converges to diagonal form in the limit \(n \to \infty\) (for real symmetric \(\mathbf{A}\)). It can be written as

where

is an orthogonal matrix.

Multiplying \(\mathbf{A_{\infty}}\) by \(\mathbf{Q}\) from the right we get an equation

Comparing to the matrix eigenvalue equation \(\mathbf{A V} = \mathbf{V D}\) one recognizes that \(\mathbf{Q} \equiv \mathbf{V}\) is the matrix of eigenvectors and \(\mathbf{A_\infty} = \mathbf{D}\) is the eigenvector matrix.

In pracice, the algorithm stops once non-diagonal elements of \(\mathbf{A}_n\) are below a certain threshold \(\epsilon\).

def eigen_qr_simple(A, iterations=1000, toprint=True):

Ak = np.copy(A)

n = len(A[0])

QQ = np.eye(n)

for k in range(iterations):

Q, R = np.linalg.qr(Ak)

Ak = np.dot(R,Q)

QQ = np.dot(QQ,Q)

if k%100 == 0 and toprint:

print("A",k,"=")

print(tabulate(Ak))

print("\n")

return Ak, QQ

eigen_qr_simple([[1,2],[2,1]])

A 0 =

--- ----

2.6 1.2

1.2 -0.6

--- ----

A 100 =

---------- ------------

3 2.18743e-16

2.5871e-48 -1

---------- ------------

A 200 =

----------- ------------

3 2.18743e-16

5.01982e-96 -1

----------- ------------

A 300 =

------------ ------------

3 2.18743e-16

9.74008e-144 -1

------------ ------------

A 400 =

------------ ------------

3 2.18743e-16

1.88989e-191 -1

------------ ------------

A 500 =

---------- ------------

3 2.18743e-16

3.667e-239 -1

---------- ------------

A 600 =

------------ ------------

3 2.18743e-16

7.11518e-287 -1

------------ ------------

A 700 =

- ------------

3 2.18743e-16

0 -1

- ------------

A 800 =

- ------------

3 2.18743e-16

0 -1

- ------------

A 900 =

- ------------

3 2.18743e-16

0 -1

- ------------

(array([[ 3.0000000e+00, 2.1874317e-16],

[ 0.0000000e+00, -1.0000000e+00]]),

array([[-0.70710678, -0.70710678],

[-0.70710678, 0.70710678]]))

eigen_qr_simple([[1,1],[-1,3]])

A 0 =

- -----------

2 1.36716e-16

2 2

- -----------

A 100 =

---------- -------

2.02 -1.9998

0.00019998 1.98

---------- -------

A 200 =

----------- --------

2.01 -1.99995

4.99988e-05 1.99

----------- --------

A 300 =

---------- --------

2.00667 -1.99998

2.2222e-05 1.99333

---------- --------

A 400 =

----------- --------

2.005 -1.99999

1.24999e-05 1.995

----------- --------

A 500 =

----------- --------

2.004 -1.99999

7.99997e-06 1.996

----------- --------

A 600 =

----------- --------

2.00333 -1.99999

5.55554e-06 1.99667

----------- --------

A 700 =

----------- --------

2.00286 -2

4.08162e-06 1.99714

----------- --------

A 800 =

--------- -------

2.0025 -2

3.125e-06 1.9975

--------- -------

A 900 =

----------- --------

2.00222 -2

2.46913e-06 1.99778

----------- --------

(array([[ 2.002002e+00, 1.999998e+00],

[-2.004004e-06, 1.997998e+00]]),

array([[-0.70639861, 0.70781424],

[-0.70781424, -0.70639861]]))

n = 5

A = np.random.rand(n, n)

# Make symmetric

for r in range(n):

for c in range(r):

A[r][c] = A[c][r]

print("A=")

print(tabulate(A))

print("\n")

print("AA* = ")

print(tabulate(np.dot(A,A.transpose())))

print("\n")

print("A*A = ")

print(tabulate(np.dot(A.transpose(),A)))

print("\n")

# We call the function

AQ = eigen_qr_simple(A)

# Print A' = D

print("A' =\n",tabulate(AQ[0]))

print("Q =\n",tabulate(AQ[1]))

# We compare our results with the official numpy algorithm

# print(np.linalg.eig(A))

P = AQ[0]

# Check orthogonality

print("v1*v2 = ", P[:,0].dot(P[:,1]))

A=

-------- -------- -------- -------- --------

0.683929 0.592074 0.177584 0.693789 0.604136

0.592074 0.705272 0.448509 0.615471 0.311012

0.177584 0.448509 0.599568 0.229103 0.695169

0.693789 0.615471 0.229103 0.246438 0.130492

0.604136 0.311012 0.695169 0.130492 0.986837

-------- -------- -------- -------- --------

AA* =

------- ------- -------- -------- -------

1.69617 1.51706 1.07241 1.1294 1.4075

1.51706 1.52465 1.04759 1.13986 1.27606

1.07241 1.04759 1.12793 0.683787 1.37949

1.1294 1.13986 0.683787 0.990396 0.93076

1.4075 1.27606 1.37949 0.93076 1.93584

------- ------- -------- -------- -------

A*A =

------- ------- -------- -------- -------

1.69617 1.51706 1.07241 1.1294 1.4075

1.51706 1.52465 1.04759 1.13986 1.27606

1.07241 1.04759 1.12793 0.683787 1.37949

1.1294 1.13986 0.683787 0.990396 0.93076

1.4075 1.27606 1.37949 0.93076 1.93584

------- ------- -------- -------- -------

A 0 =

--------- ---------- ---------- ---------- ----------

2.28904 0.403831 0.354855 -0.31424 0.153568

0.403831 0.300969 0.0273823 0.166383 -0.185341

0.354855 0.0273823 0.902426 -0.0880704 0.226274

-0.31424 0.166383 -0.0880704 -0.252184 -0.0114637

0.153568 -0.185341 0.226274 -0.0114637 -0.0182059

--------- ---------- ---------- ---------- ----------

A 100 =

------------- ------------ ------------ ------------ ------------

2.49109 1.19383e-16 -3.18497e-16 7.23565e-17 -2.86681e-16

-1.75215e-46 0.877495 -1.56711e-16 -5.2497e-16 -9.55922e-17

-3.53332e-81 1.66639e-35 -0.38929 0.00254435 -4.84974e-17

3.38249e-84 -3.48368e-38 0.00254435 0.364736 -4.57125e-18

1.82972e-132 -3.14031e-86 2.06797e-51 5.12209e-49 -0.121984

------------- ------------ ------------ ------------ ------------

A 200 =

------------- ------------- ------------- ------------ ------------

2.49109 1.19383e-16 -3.18739e-16 7.12829e-17 -2.86681e-16

-8.49365e-92 0.877495 -1.54941e-16 -5.25495e-16 -9.55922e-17

-8.65964e-162 8.42501e-71 -0.389298 3.76897e-06 -4.84818e-17

1.228e-167 -2.60901e-76 3.76897e-06 0.364745 -4.73462e-18

1.79418e-263 -6.35228e-172 8.27389e-102 1.38348e-96 -0.121984

------------- ------------- ------------- ------------ ------------

A 300 =

------------- ------------- ------------- ------------- ------------

2.49109 1.19383e-16 -3.1874e-16 7.12813e-17 -2.86681e-16

-4.11735e-137 0.877495 -1.54939e-16 -5.25496e-16 -9.55922e-17

-2.12235e-242 4.25956e-106 -0.389298 5.58296e-09 -4.84817e-17

4.45817e-251 -1.95394e-114 5.58296e-09 0.364745 -4.73487e-18

0 -1.28495e-257 3.31035e-152 3.73677e-144 -0.121984

------------- ------------- ------------- ------------- ------------

A 400 =

------------- ------------- ------------- ------------ ------------

2.49109 1.19383e-16 -3.1874e-16 7.12813e-17 -2.86681e-16

-1.99591e-182 0.877495 -1.54939e-16 -5.25496e-16 -9.55922e-17

-5.43472e-323 2.15357e-141 -0.389298 8.27034e-12 -4.84817e-17

0 -1.46334e-152 8.26999e-12 0.364745 -4.73487e-18

0 0 1.32445e-202 1.0093e-191 -0.121984

------------- ------------- ------------- ------------ ------------

A 500 =

------------- ------------- ------------- ------------- ------------

2.49109 1.19383e-16 -3.1874e-16 7.12813e-17 -2.86681e-16

-9.67532e-228 0.877495 -1.54939e-16 -5.25496e-16 -9.55922e-17

0 1.08881e-176 -0.389298 1.25987e-14 -4.84817e-17

0 -1.09592e-190 1.22503e-14 0.364745 -4.73487e-18

0 0 5.29908e-253 2.72609e-239 -0.121984

------------- ------------- ------------- ------------- ------------

A 600 =

------------- ------------- ------------- ------------- ------------

2.49109 1.19383e-16 -3.1874e-16 7.12813e-17 -2.86681e-16

-4.69017e-273 0.877495 -1.54939e-16 -5.25496e-16 -9.55922e-17

0 5.50487e-212 -0.389298 3.66538e-16 -4.84817e-17

0 -8.20759e-229 1.81463e-17 0.364745 -4.73487e-18

0 0 2.12014e-303 7.36315e-287 -0.121984

------------- ------------- ------------- ------------- ------------

A 700 =

------------- ------------ ------------ ------------ ------------

2.49109 1.19383e-16 -3.1874e-16 -7.12813e-17 -2.86681e-16

-2.27359e-318 0.877495 -1.54939e-16 5.25496e-16 -9.55922e-17

0 2.78318e-247 -0.389298 -3.48418e-16 -4.84817e-17

0 6.14682e-267 -2.68799e-20 0.364745 4.73487e-18

0 0 0 0 -0.121984

------------- ------------ ------------ ------------ ------------

A 800 =

------- ------------- ------------ ------------ ------------

2.49109 -1.19383e-16 3.1874e-16 7.12813e-17 2.86681e-16

0 0.877495 -1.54939e-16 5.25496e-16 -9.55922e-17

0 1.40713e-282 -0.389298 -3.48392e-16 -4.84817e-17

0 4.60347e-305 -3.9817e-23 0.364745 4.73487e-18

0 0 0 0 -0.121984

------- ------------- ------------ ------------ ------------

A 900 =

------- ------------- ------------ ------------ ------------

2.49109 -1.19383e-16 3.1874e-16 7.12813e-17 2.86681e-16

0 0.877495 -1.54939e-16 5.25496e-16 -9.55922e-17

0 7.11425e-318 -0.389298 -3.48392e-16 -4.84817e-17

0 4.94066e-324 -5.89807e-26 0.364745 4.73487e-18

0 0 0 0 -0.121984

------- ------------- ------------ ------------ ------------

A' =

------- ------------- ------------ ------------ ------------

2.49109 1.19383e-16 -3.1874e-16 7.12813e-17 2.86681e-16

0 0.877495 -1.54939e-16 -5.25496e-16 9.55922e-17

0 -4.94066e-324 -0.389298 3.48392e-16 4.84817e-17

0 -4.94066e-324 -9.32491e-29 0.364745 4.73487e-18

0 0 0 0 -0.121984

------- ------------- ------------ ------------ ------------

Q =

-------- --------- ---------- ---------- ---------

0.497045 0.316077 -0.553474 0.540854 -0.232777

0.471589 0.373493 -0.0934707 -0.473293 0.636681

0.387313 -0.422712 -0.25202 -0.587414 -0.512576

0.351922 0.427685 0.71898 -0.0181452 -0.419494

0.506646 -0.631663 0.323238 0.3716 0.31897

-------- --------- ---------- ---------- ---------

v1*v2 = 2.973931329883532e-16